|

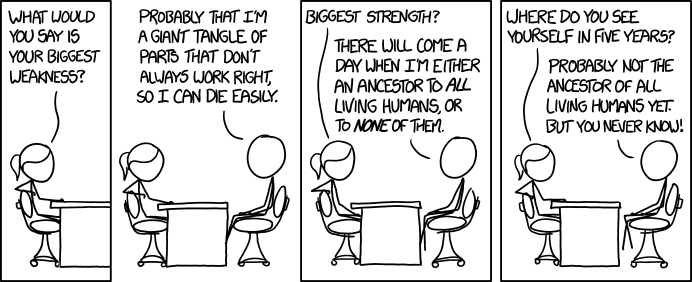

| XKCD: Strengths and Weaknesses |

Have you ever had programming/algorithm interview questions that require you to write code off the top-of-your-head without a keyboard at your fingertips, while in a stressful situation, away from your normal development environment?

I've had a few interviews like that in the past. I usually come up with all sorts of creative answers

... starting right after I finish the interview, as I'm driving away in my car, then hitting a peak the morning after (that's when I really shine!). During the actual interview, my apparent IQ drops by 1/3.

Maybe the reason I suck at "whiteboard coding" in interviews is that I don't practice it. It is nothing like what I actually do for a job, so I would need to suck it up and spend some time practicing "coding quizzes" to do well in such an interview. I would rather skip that and do real work instead.

Also, I have to admit another probable reason as well. I'm just not well-suited for it. I would characterize my general methodology as somewhat measured, thoughtful and methodical to offset my zany, intuitive, artsy tendencies. This is an unlikely teeter-totter balance honed from dauntless decades of determined dedication. Also, there is a certain amount of high-quality coffee required. This juggling act just does not mix well with interviews. Strengths are weaknesses.

Having said all that, I'm going to visit a worst-nightmare example of an interview question for "educational purposes":

THE INTERVIEW QUESTION

Given a list of n+2 numbers, with all numbers in the range of 1..n and all numbers unique except for two numbers which each occur twice, find the duplicate numbers. Use two different methods, each using no more than one loop. Compare and contrast the advantages and disadvantages of each.

To get warmed up, first let's start with the the easiest algorithm I can think of -- one that would be a FAIL because it uses two loops:

Method #1 Nested Loops

Python:

mylist = [1,2,3,3,4,5,6,6,7]

mylist_size = len(mylist)

for x in range (0, mylist_size):

for y in range (x + 1, mylist_size):

if mylist[x] == mylist[y]:

print(mylist[x])

This method does not take advantage of all the provided information: that there are only two repeating elements and the elements are in the range 1..n. It is a more generic and flexible approach, which can adapt to an arbitrary number of repeating elements (not just two) and can also handle lists of numbers outside of the 1..n range. Such flexibility is valuable if the needs are likely to evolve over time, which is a valuable consideration in building real-world systems.

The performance is O(n²), so is directly proportional to the square of the list size (it will gobble up cpu/time). For example, a 1,000 item list only took 6 seconds on my laptop, but a 10,000 item list took over two minutes. I can estimate that a 100,000 item list would take 100 times longer (about 3 1/2 hours).

On a positive note, it requires minimal O(1) state (it will not gobble up disk/memory) and is the simplest of the three methods.

This does not scale out well as it is essentially a cartesian product. It would be problematic to distribute this across many compute nodes as you would need to copy/shuffle the list around quite a bit.

This approach is perhaps well-suited for implementations with tiny data (dozens to hundreds of rows tops), where the code-simplicity outweighs all other concerns. Maybe it is also useful as an example of What Not To Do.

Method #2 loop and dictionary

For the second attempt, let's try to actually provide a solution that will meet the requirements.

Python:

mylist = [1,2,3,3,4,5,6,6,7]

mylist_size = len(mylist)

# create dictionary of counts

count_dict = {}

for x in range(0, mylist_size):

# we have already seen this element, it is a duplicate

if (mylist[x] in count_dict):

print(mylist[x])

else:

# have not seen this element yet, so add to dictionary

count_dict[mylist[x]] = 1

Like method #1, this method does not take advantage of all the provided information: that there are only two repeating elements and that the numbers are in the range 1..n. Similar to method #1 it is also a more generic and flexible approach that can handle similar adaptations.

This approach performs O(n) for cpu/time but also requires O(n) state (memory/disk -- whichever) for the dictionary, so is more efficient in terms of cpu/time and state than method #1, but is more complicated. There are variations that reduce state a little by keeping a dictionary of hashes, or modifying the list itself to mark duplicates.

A 100,000 item list took about 6 seconds on my laptop (I WAG this is ~2,000 times faster than method 1 for the same sized list). I did not bother to measure the memory used by the dictionary, but that would be the limit one would hit first using this method.

Create a system of equations to solve for the two repeating values.

given:

S is sum of all numbers in the list

P is the product of all numbers in the list

X and Y are the duplicate numbers in the list

the sum of integers from 1 to N is N(N+1)/2

the product of integers from 1 to N is N!

When the sum of the list is subtracted from N(N+1)/2, we get X + Y because X and Y are the two numbers missing from set [1..N]:

(1) X + Y = N(N+1)/2 - S

Similarly, calculate the product P of the list. When this product of the list is divided by N!, we get X*Y:

(2) XY = P/N!

Using the above two equations, we can solve for X and Y. I could use an Algebra refresher, so this could probably be done more simply, but here is an example:

For array = 4 2 4 5 2 3 1, we get S = 21 and P = 960.

start with (1) from above X + Y = S - N(N+1)/2 - S

substitute values for S and N

X + Y = (4+2+4+5+2+3+1) - 5(5+1)/2

X + Y = 21 - 15

X + Y = 6

start with (2) from above XY = P/N!

substitute values for P and N and reduce

XY = 960/5!

XY = 8

State (X - Y) in terms of (X + Y) and (XY) to make solving with substitution easier:

restate using square root of a square identity

X - Y = sqrt( (X - Y)² )

expand difference of squares

X - Y = sqrt( X² + Y² - 2XY )

restate xy terms

X - Y = sqrt( X² + Y² + 2XY - 4XY )

factor sum of squares

X - Y = sqrt( (X + Y² - 4XY )

substitute values for P and N and reduce

X - Y = sqrt( 6² - 4*8 )

X - Y = sqrt( 36 - 32)

X - Y = sqrt(4)

X - Y = 2

Now, we have a simple system of two equations to solve:

(3) X + Y = 6

(4) X - Y = 2

add (3) and (4) from above to solve for X

X = ((X - Y) + (X + Y)) / 2

X = (2 + 6) / 2

X = 8 / 2

X = 4

subtract (4) from (3) above to solve for Y

Y = 4 / 2

Y = 2

Python:

Unlike #1 and #2, this method DOES exploit all of the provided information, so is a more specialized and fragile approach. I imagine that this approach could be modified to handle exactly z (1,3, etc..) repeating elements, but it would quickly become very complicated to handle an arbitrary number of repeating elements (some sort of dynamic application of the binomial theorem?). Methods #1 and #2 can do this already without any code changes.

This is by far the most complex approach -- especially if your Algebra is as rusty as mine.

This approach performs O(n) (similar to method #2) for cpu/time as it traverses the list to calculate the sum and product, but requires somewhat less than O(n) state to store the product and sum. There is a relatively large cost to calculating the product that does add up, though. For example, calculating the product of a 100,000 item list in Python took about 6 minutes on my laptop (similar to method #2).

This approach is vulnerable to overflow in the sum and product calculations. Interestingly, overflow is either very unlikely or not possible in pure Python 3 (not Numpy, etc.), but is a real issue in many other languages and environments.

The Oracle database number datatype is 124 digits (enough for only an 83 item list) but only has 38 digit precision, so is really only enough for about a 43 item list), which is barely sufficient for trivial PL/SQL textbook examples.

There are SQL analogues to methods #1 and #2 (the same SQL that declared the solution would result in either a nested loop (method #1) or hash loop (method #2) self-join plan based on what the optimizer knew about the data). Nuff said about that though as it is a whole topic unto itself.

Method #3 is effectively a naive hash that has no collisions but suffers from arithmetic overflow. If I spent time on a more thoughtful hash-based approach, it may be possible to eliminate the overflow concern and I suppose the hash collision concern as well. Using a single hash (not a hash table) to detect duplicates for an arbitrary list is maybe not possible, but at the very least is a Research Problem. This also deserves being treated as a separate discussion topic, so let's ignore that here as well.

There is also a class of solutions that rely on first sorting the list, which then simplifies scanning through list for what are now adjacent duplicates. To me, this could be argued as another variation of Method #2 (with extra work up front) where you modify the list to mark duplicates.

I think there are probably other methods that are not just simple variations on the above themes, but I can't think of them, even after scratching my head on and off for a week.

Nope, I did not do this to practice for interviews, but simply for good, clean fun that segued from me playing around with Python, refreshing my Algebra and struggling through Donald Knuth (yes I am that twisted).

A 100,000 item list took about 6 seconds on my laptop (I WAG this is ~2,000 times faster than method 1 for the same sized list). I did not bother to measure the memory used by the dictionary, but that would be the limit one would hit first using this method.

This approach is straightforward to scale out across many nodes as you can easily partition out the work and the reassemble the results.

I would generally default to this approach as the best-balanced of the three methods here.

Method #3 system of equations

given:

S is sum of all numbers in the list

P is the product of all numbers in the list

X and Y are the duplicate numbers in the list

the sum of integers from 1 to N is N(N+1)/2

the product of integers from 1 to N is N!

When the sum of the list is subtracted from N(N+1)/2, we get X + Y because X and Y are the two numbers missing from set [1..N]:

(1) X + Y = N(N+1)/2 - S

Similarly, calculate the product P of the list. When this product of the list is divided by N!, we get X*Y:

(2) XY = P/N!

Using the above two equations, we can solve for X and Y. I could use an Algebra refresher, so this could probably be done more simply, but here is an example:

For array = 4 2 4 5 2 3 1, we get S = 21 and P = 960.

start with (1) from above X + Y = S - N(N+1)/2 - S

substitute values for S and N

X + Y = (4+2+4+5+2+3+1) - 5(5+1)/2

X + Y = 21 - 15

X + Y = 6

start with (2) from above XY = P/N!

substitute values for P and N and reduce

XY = 960/5!

XY = 8

State (X - Y) in terms of (X + Y) and (XY) to make solving with substitution easier:

restate using square root of a square identity

X - Y = sqrt( (X - Y)² )

expand difference of squares

X - Y = sqrt( X² + Y² - 2XY )

restate xy terms

X - Y = sqrt( X² + Y² + 2XY - 4XY )

factor sum of squares

X - Y = sqrt( (X + Y² - 4XY )

substitute values for P and N and reduce

X - Y = sqrt( 6² - 4*8 )

X - Y = sqrt( 36 - 32)

X - Y = sqrt(4)

X - Y = 2

Now, we have a simple system of two equations to solve:

(3) X + Y = 6

(4) X - Y = 2

add (3) and (4) from above to solve for X

X = ((X - Y) + (X + Y)) / 2

X = (2 + 6) / 2

X = 8 / 2

X = 4

subtract (4) from (3) above to solve for Y

Y = ((X + Y) - (X - Y)) / 2

Y = (6 - 2) / 2Y = 4 / 2

Y = 2

Python:

import math

mylist = [1,2,3,3,4,5,6,6,7]

mylist_size = len(mylist)

n = mylist_size - 2

# Calculate sum and product of all elements in list

s = 0

p = 1

for i in range(0, mylist_size):

s = s + mylist[i]

p = p * mylist[i]

# x + y

s = s - n * (n + 1) // 2

# x * y

p = p // math.factorial(n)

# x - y

d = math.sqrt(s * s - 4 * p)

# solve

x = (d + s) // 2

y = (s - d) // 2

print(int(x))

print(int(y))Unlike #1 and #2, this method DOES exploit all of the provided information, so is a more specialized and fragile approach. I imagine that this approach could be modified to handle exactly z (1,3, etc..) repeating elements, but it would quickly become very complicated to handle an arbitrary number of repeating elements (some sort of dynamic application of the binomial theorem?). Methods #1 and #2 can do this already without any code changes.

This is by far the most complex approach -- especially if your Algebra is as rusty as mine.

This approach performs O(n) (similar to method #2) for cpu/time as it traverses the list to calculate the sum and product, but requires somewhat less than O(n) state to store the product and sum. There is a relatively large cost to calculating the product that does add up, though. For example, calculating the product of a 100,000 item list in Python took about 6 minutes on my laptop (similar to method #2).

This approach is vulnerable to overflow in the sum and product calculations. Interestingly, overflow is either very unlikely or not possible in pure Python 3 (not Numpy, etc.), but is a real issue in many other languages and environments.

The Oracle database number datatype is 124 digits (enough for only an 83 item list) but only has 38 digit precision, so is really only enough for about a 43 item list), which is barely sufficient for trivial PL/SQL textbook examples.

Musings on Other Methods

Method #3 is effectively a naive hash that has no collisions but suffers from arithmetic overflow. If I spent time on a more thoughtful hash-based approach, it may be possible to eliminate the overflow concern and I suppose the hash collision concern as well. Using a single hash (not a hash table) to detect duplicates for an arbitrary list is maybe not possible, but at the very least is a Research Problem. This also deserves being treated as a separate discussion topic, so let's ignore that here as well.

There is also a class of solutions that rely on first sorting the list, which then simplifies scanning through list for what are now adjacent duplicates. To me, this could be argued as another variation of Method #2 (with extra work up front) where you modify the list to mark duplicates.

I think there are probably other methods that are not just simple variations on the above themes, but I can't think of them, even after scratching my head on and off for a week.

Nope, I did not do this to practice for interviews, but simply for good, clean fun that segued from me playing around with Python, refreshing my Algebra and struggling through Donald Knuth (yes I am that twisted).

No comments:

Post a Comment