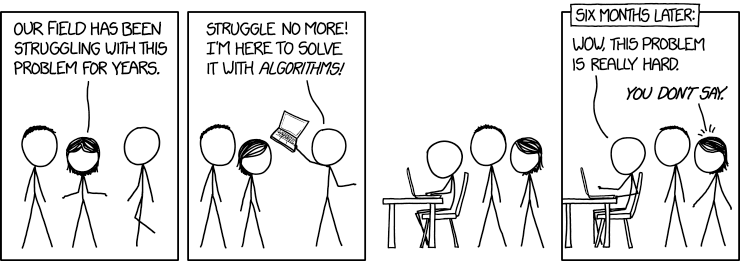

XKCD: Here To Help

|

In a previous blog entry "Grokking the Data Model", I prototyped what appeared to be a reasonably efficient approach at programmatically finding single-column functional dependencies within a database table. I implemented an Oracle SQL prototype and ran it through some basic tests, it appeared to work and possibly be the tiny kernel of something useful.

This post covers the next step as I attempt to turn the idea "hot air" into a useful utility. First, I'll repeat the example table I am using:

Create a simple table and fill with sample data

create table lab_test_rslt (

seq_no number,

subj_no number,

site_no number,

site_name varchar2(35),

test_code varchar2(35),

test_val varchar2(35),

test_rslt varchar2(35),

primary key (seq_no)

);

insert into lab_test_rslt values (1,1,10,'Happy Clinic','HR',60,'OK');

insert into lab_test_rslt values (2,2,10,'Happy Clinic','HR',70,'OK');

insert into lab_test_rslt values (3, 3,10,'Happy Clinic','HR',80,'OK');

insert into lab_test_rslt values (4, 4,10,'Happy Clinic','HR',55,'OK');

insert into lab_test_rslt values (5, 1,20,'Regional Med Ctr','HR',0,'DEATH');

insert into lab_test_rslt values (6,2,20,'Regional Med Ctr','HR',170,'HIGH');

insert into lab_test_rslt values (7,1,30,'Central Hospital','HR',65,'OK');

-- whoops! maybe a typo in site_name!

insert into lab_test_rslt values (8,3,30,'Centrel Hospital','HR',20,'LOW');

commit;

select * from lab_test_rslt order by 1;

SEQ_NO SUBJ_NO SITE_NO SITE_NAME TEST_CODE TEST_VAL TEST_RSLT

1 1 10 Happy Clinic HR 60 OK

2 2 10 Happy Clinic HR 70 OK

3 3 10 Happy Clinic HR 80 OK

4 4 10 Happy Clinic HR 55 OK

5 1 20 Regional Med Ctr HR 0 DEATH

6 2 20 Regional Med Ctr HR 170 HIGH

7 1 30 Central Hospital HR 65 OK

8 3 30 Centrel Hospital HR 20 LOW

8 rows selected. Time to try our current approach out on all columns in our example table

with x as (

select

case when count(distinct subj_no) over (partition by seq_no) = 1 then 1 else 0 end c1_cnt,

case when count(distinct site_no) over (partition by seq_no) = 1 then 1 else 0 end c2_cnt,

case when count(distinct site_name) over (partition by seq_no) = 1 then 1 else 0 end c3_cnt,

case when count(distinct test_code) over (partition by seq_no) = 1 then 1 else 0 end c4_cnt,

case when count(distinct test_val) over (partition by seq_no) = 1 then 1 else 0 end c5_cnt,

case when count(distinct test_rslt) over (partition by seq_no) = 1 then 1 else 0 end c6_cnt,

--

case when count(distinct seq_no) over (partition by subj_no) = 1 then 1 else 0 end c7_cnt,

case when count(distinct site_no) over (partition by subj_no) = 1 then 1 else 0 end c8_cnt,

case when count(distinct site_name) over (partition by subj_no) = 1 then 1 else 0 end c9_cnt,

case when count(distinct test_code) over (partition by subj_no) = 1 then 1 else 0 end c10_cnt,

case when count(distinct test_val) over (partition by subj_no) = 1 then 1 else 0 end c11_cnt,

case when count(distinct test_rslt) over (partition by subj_no) = 1 then 1 else 0 end c12_cnt,

--

case when count(distinct seq_no) over (partition by site_no) = 1 then 1 else 0 end c13_cnt,

case when count(distinct subj_no) over (partition by site_no) = 1 then 1 else 0 end c14_cnt,

case when count(distinct site_name) over (partition by site_no) = 1 then 1 else 0 end c15_cnt,

case when count(distinct test_code) over (partition by site_no) = 1 then 1 else 0 end c16_cnt,

case when count(distinct test_val) over (partition by site_no) = 1 then 1 else 0 end c17_cnt,

case when count(distinct test_rslt) over (partition by site_no) = 1 then 1 else 0 end c18_cnt,

--

case when count(distinct seq_no) over (partition by site_name) = 1 then 1 else 0 end c19_cnt,

case when count(distinct subj_no) over (partition by site_name) = 1 then 1 else 0 end c20_cnt,

case when count(distinct site_no) over (partition by site_name) = 1 then 1 else 0 end c21_cnt,

case when count(distinct test_code) over (partition by site_name) = 1 then 1 else 0 end c22_cnt,

case when count(distinct test_val) over (partition by site_name) = 1 then 1 else 0 end c23_cnt,

case when count(distinct test_rslt) over (partition by site_name) = 1 then 1 else 0 end c24_cnt,

--

case when count(distinct seq_no) over (partition by test_code) = 1 then 1 else 0 end c25_cnt,

case when count(distinct subj_no) over (partition by test_code) = 1 then 1 else 0 end c26_cnt,

case when count(distinct site_no) over (partition by test_code) = 1 then 1 else 0 end c27_cnt,

case when count(distinct site_name) over (partition by test_code) = 1 then 1 else 0 end c28_cnt,

case when count(distinct test_val) over (partition by test_code) = 1 then 1 else 0 end c29_cnt,

case when count(distinct test_rslt) over (partition by test_code) = 1 then 1 else 0 end c30_cnt,

--

case when count(distinct seq_no) over (partition by test_val) = 1 then 1 else 0 end c31_cnt,

case when count(distinct subj_no) over (partition by test_val) = 1 then 1 else 0 end c32_cnt,

case when count(distinct site_no) over (partition by test_val) = 1 then 1 else 0 end c33_cnt,

case when count(distinct site_name) over (partition by test_val) = 1 then 1 else 0 end c34_cnt,

case when count(distinct test_code) over (partition by test_val) = 1 then 1 else 0 end c35_cnt,

case when count(distinct test_rslt) over (partition by test_val) = 1 then 1 else 0 end c36_cnt,

--

case when count(distinct seq_no) over (partition by test_rslt) = 1 then 1 else 0 end c37_cnt,

case when count(distinct subj_no) over (partition by test_rslt) = 1 then 1 else 0 end c38_cnt,

case when count(distinct site_no) over (partition by test_rslt) = 1 then 1 else 0 end c39_cnt,

case when count(distinct site_name) over (partition by test_rslt) = 1 then 1 else 0 end c40_cnt,

case when count(distinct test_code) over (partition by test_rslt) = 1 then 1 else 0 end c41_cnt,

case when count(distinct test_val) over (partition by test_rslt) = 1 then 1 else 0 end c42_cnt

from lab_test_rslt

), x2 as (

select

'seq_no' c1_name_1, 'subj_no' c1_name_2, sum(c1_cnt) / count(*) c1_fd_score,

'seq_no' c2_name_1, 'site_no' c2_name_2, sum(c2_cnt) / count(*) c2_fd_score,

'seq_no' c3_name_1, 'site_name' c3_name_2, sum(c3_cnt) / count(*) c3_fd_score,

'seq_no' c4_name_1, 'test_code' c4_name_2, sum(c4_cnt) / count(*) c4_fd_score,

'seq_no' c5_name_1, 'test_val' c5_name_2, sum(c5_cnt) / count(*) c5_fd_score,

'seq_no' c6_name_1, 'test_rslt' c6_name_2, sum(c6_cnt) / count(*) c6_fd_score,

--

'subj_no' c7_name_1, 'seq_no' c7_name_2, sum(c7_cnt) / count(*) c7_fd_score,

'subj_no' c8_name_1, 'site_no' c8_name_2, sum(c8_cnt) / count(*) c8_fd_score,

'subj_no' c9_name_1, 'site_name' c9_name_2, sum(c9_cnt) / count(*) c9_fd_score,

'subj_no' c10_name_1, 'test_code' c10_name_2, sum(c10_cnt) / count(*) c10_fd_score,

'subj_no' c11_name_1, 'test_val' c11_name_2, sum(c11_cnt) / count(*) c11_fd_score,

'subj_no' c12_name_1, 'test_rslt' c12_name_2, sum(c12_cnt) / count(*) c12_fd_score,

--

'site_no' c13_name_1, 'seq_no' c13_name_2, sum(c13_cnt) / count(*) c13_fd_score,

'site_no' c14_name_1, 'subj_no' c14_name_2, sum(c14_cnt) / count(*) c14_fd_score,

'site_no' c15_name_1, 'site_name' c15_name_2, sum(c15_cnt) / count(*) c15_fd_score,

'site_no' c16_name_1, 'test_code' c16_name_2, sum(c16_cnt) / count(*) c16_fd_score,

'site_no' c17_name_1, 'test_val' c17_name_2, sum(c17_cnt) / count(*) c17_fd_score,

'site_no' c18_name_1, 'test_rslt' c18_name_2, sum(c18_cnt) / count(*) c18_fd_score,

--

'site_name' c19_name_1, 'seq_no' c19_name_2, sum(c19_cnt) / count(*) c19_fd_score,

'site_name' c20_name_1, 'subj_no' c20_name_2, sum(c20_cnt) / count(*) c20_fd_score,

'site_name' c21_name_1, 'site_no' c21_name_2, sum(c21_cnt) / count(*) c21_fd_score,

'site_name' c22_name_1, 'test_code' c22_name_2, sum(c22_cnt) / count(*) c22_fd_score,

'site_name' c23_name_1, 'test_val' c23_name_2, sum(c23_cnt) / count(*) c23_fd_score,

'site_name' c24_name_1, 'test_rslt' c24_name_2, sum(c24_cnt) / count(*) c24_fd_score,

--

'test_code' c25_name_1, 'seq_no' c25_name_2, sum(c25_cnt) / count(*) c25_fd_score,

'test_code' c26_name_1, 'subj_no' c26_name_2, sum(c26_cnt) / count(*) c26_fd_score,

'test_code' c27_name_1, 'site_no' c27_name_2, sum(c27_cnt) / count(*) c27_fd_score,

'test_code' c28_name_1, 'site_name' c28_name_2, sum(c28_cnt) / count(*) c28_fd_score,

'test_code' c29_name_1, 'test_val' c29_name_2, sum(c29_cnt) / count(*) c29_fd_score,

'test_code' c30_name_1, 'test_rslt' c30_name_2, sum(c30_cnt) / count(*) c30_fd_score,

--

'test_val' c31_name_1, 'seq_no' c31_name_2, sum(c31_cnt) / count(*) c31_fd_score,

'test_val' c32_name_1, 'site_no' c32_name_2, sum(c32_cnt) / count(*) c32_fd_score,

'test_val' c33_name_1, 'site_name' c33_name_2, sum(c33_cnt) / count(*) c33_fd_score,

'test_val' c34_name_1, 'test_code' c34_name_2, sum(c34_cnt) / count(*) c34_fd_score,

'test_val' c35_name_1, 'test_rslt' c35_name_2, sum(c35_cnt) / count(*) c35_fd_score,

'test_val' c36_name_1, 'subj_no' c36_name_2, sum(c36_cnt) / count(*) c36_fd_score,

--

'test_rslt' c37_name_1, 'site_no' c37_name_2, sum(c37_cnt) / count(*) c37_fd_score,

'test_rslt' c38_name_1, 'site_name' c38_name_2, sum(c38_cnt) / count(*) c38_fd_score,

'test_rslt' c39_name_1, 'test_code' c39_name_2, sum(c39_cnt) / count(*) c39_fd_score,

'test_rslt' c40_name_1, 'test_val' c40_name_2, sum(c40_cnt) / count(*) c40_fd_score,

'test_rslt' c41_name_1, 'seq_no' c41_name_2, sum(c41_cnt) / count(*) c41_fd_score,

'test_rslt' c42_name_1, 'subj_no' c42_name_2, sum(c42_cnt) / count(*) c42_fd_score

from x

)

select c1, c2, fd_score

from x2

unpivot include nulls (

(c1, c2, fd_score)

for name in (

(c1_name_1, c1_name_2, c1_fd_score),

(c2_name_1, c2_name_2, c2_fd_score),

(c3_name_1, c3_name_2, c3_fd_score),

(c4_name_1, c4_name_2, c4_fd_score),

(c5_name_1, c5_name_2, c5_fd_score),

(c6_name_1, c6_name_2, c6_fd_score),

--

(c7_name_1, c7_name_2, c7_fd_score),

(c8_name_1, c8_name_2, c8_fd_score),

(c9_name_1, c9_name_2, c9_fd_score),

(c10_name_1, c10_name_2, c10_fd_score),

(c11_name_1, c11_name_2, c11_fd_score),

(c12_name_1, c12_name_2, c12_fd_score),

--

(c13_name_1, c13_name_2, c13_fd_score),

(c14_name_1, c14_name_2, c14_fd_score),

(c15_name_1, c15_name_2, c15_fd_score),

(c16_name_1, c16_name_2, c16_fd_score),

(c17_name_1, c17_name_2, c17_fd_score),

(c18_name_1, c18_name_2, c18_fd_score),

--

(c19_name_1, c19_name_2, c19_fd_score),

(c20_name_1, c20_name_2, c20_fd_score),

(c21_name_1, c21_name_2, c21_fd_score),

(c22_name_1, c22_name_2, c22_fd_score),

(c23_name_1, c23_name_2, c23_fd_score),

(c24_name_1, c24_name_2, c24_fd_score),

--

(c25_name_1, c25_name_2, c25_fd_score),

(c26_name_1, c26_name_2, c26_fd_score),

(c27_name_1, c27_name_2, c27_fd_score),

(c28_name_1, c28_name_2, c28_fd_score),

(c29_name_1, c29_name_2, c29_fd_score),

(c30_name_1, c30_name_2, c30_fd_score),

--

(c31_name_1, c31_name_2, c31_fd_score),

(c32_name_1, c32_name_2, c32_fd_score),

(c33_name_1, c33_name_2, c33_fd_score),

(c34_name_1, c34_name_2, c34_fd_score),

(c35_name_1, c35_name_2, c35_fd_score),

(c36_name_1, c36_name_2, c36_fd_score),

--

(c37_name_1, c37_name_2, c37_fd_score),

(c38_name_1, c38_name_2, c38_fd_score),

(c39_name_1, c39_name_2, c39_fd_score),

(c40_name_1, c40_name_2, c40_fd_score),

(c41_name_1, c41_name_2, c41_fd_score),

(c42_name_1, c42_name_2, c42_fd_score)

)

);

C1 C2 FD_SCORE NOTES(see below)

seq_no subj_no 1 (2)

seq_no site_no 1 (2)

seq_no site_name 1 (2)

seq_no test_code 1 (2)(5)

seq_no test_val 1 (3)

seq_no test_rslt 1 (2)

subj_no seq_no 0.125 (1)

subj_no site_no 0.125

subj_no site_name 0.125

subj_no test_code 1 (5)

subj_no test_val 0.125 (1)

subj_no test_rslt 0.125

site_no seq_no 0 (1)

site_no subj_no 0

site_no site_name 0.75 =====> almost FD

site_no test_code 1 (5)

site_no test_val 0 (1)

site_no test_rslt 0.5

site_name seq_no 0.25 (1)

site_name subj_no 0.25

site_name site_no 1 =====> a FD!

site_name test_code 1 (5)

site_name test_val 0.25 (1)

site_name test_rslt 0.75 =====> yet another almost FD?

test_code seq_no 0 (1)(4)

test_code subj_no 0 (4)

test_code site_no 0 (4)

test_code site_name 0 (4)

test_code test_val 0 (1)(6)

test_code test_rslt 0 (4)

test_val seq_no 1 (3)(4)

test_val site_no 1 (2)(4)

test_val site_name 1 (2)(4)

test_val test_code 1 (2)(6)

test_val test_rslt 1 (2)(4)

test_val subj_no 1 (2)(4)

test_rslt site_no 0.375

test_rslt site_name 0.375

test_rslt test_code 0.375 (5)

test_rslt test_val 0.375 (1)

test_rslt seq_no 1 (1)

test_rslt subj_no 0.375

42 rows selected.

Good Grief!

I spent some time wrapping my head around this. This sure is a lot of SQL for analyzing a modest seven column table! That awkwardness may become an issue later (foreshadowing). Well, hopefully I can mitigate some of the pain of the large/ugly SQL by not writing it by hand and generating it instead.

A general-purpose language like Python or Java would be the typical choice to hack away at this with, but I saw a possible opportunity to optimize by bringing the "code to the data" if I could represent a functional dependency algorithm in SQL. Whether Oracle, Postgres, or Spark SQL, a similar approach could be applied to multiple domains.

The above results nicely illustrate a few useful things.

Functional dependencies are not symmetric: C1->C2 does not imply C2->C1. For example Site_no is functionally dependent on site_name, but not vice-versa.

Test_rslt is almost functionally dependent on site_no. Happy Clinic sure does seem happier than anywhere else. Someone may need to look into that :).

I also noticed a lot of false positives and other weirdness that can be leveraged to further optimize the approach. I added a "NOTES" column above and detailed out these cases below.

(1) When C1 is not unique and C2 is unique, then C1!->C2.

If column C1 is not unique, and C2 is unique, then C2 from cannot be functionally dependent on C1.

ex:

C1=* except seq_no C2=test_val

C1=* except test_val C2=seq_no

(2) When C1 is unique and C2 is not unique, then C1->C2.

C1, being unique, is simply a candidate key for the table. Therefore, C2 is functionally dependent on C1.

ex:

C1=test_val C2=* except seq_no

C1=seq_no C2=* except test_val

(3) When both C1 and C2 are unique, then C1->C2 and C2->C1.

ex:

C1=seq_no C2=test_val

C1=test_val C2=seq_no

Analyzing cases with either C1 and/or C2 is unique is a wasted effort; providing no new useful information. A useful approach is to exclude unique columns from FD analysis.

ex:

C1=test_code C2=* except test_val

C1=test_val C2=* except test_code

(5) When C2 has a single value for all rows and C1 does not, then C1->C2.

C2, always having the same value, can be "derived" from anything/nothing. C1 will appear as a "false positive" functional dependency.

ex:

C1=* except test_val C2=test_code

C1=* except test_code C2=test_val

(6) When both C1 and C2 have a single value for all rows, then C1->C2 and C2->C1.

ex:

C1=test_val C2=test_code

C1=test_code C2=test_val

Analyzing cases with either C1 and/or C2 is having single values is a wasted effort; providing no new useful information. A useful approach is to exclude single-value columns from FD analysis.

I also noticed a lot of false positives and other weirdness that can be leveraged to further optimize the approach. I added a "NOTES" column above and detailed out these cases below.

Special cases that can ideally be detected beforehand and excluded from the FD analysis

Uniqueness Cases(1) When C1 is not unique and C2 is unique, then C1!->C2.

If column C1 is not unique, and C2 is unique, then C2 from cannot be functionally dependent on C1.

ex:

C1=* except seq_no C2=test_val

C1=* except test_val C2=seq_no

(2) When C1 is unique and C2 is not unique, then C1->C2.

C1, being unique, is simply a candidate key for the table. Therefore, C2 is functionally dependent on C1.

ex:

C1=test_val C2=* except seq_no

C1=seq_no C2=* except test_val

(3) When both C1 and C2 are unique, then C1->C2 and C2->C1.

ex:

C1=seq_no C2=test_val

C1=test_val C2=seq_no

Analyzing cases with either C1 and/or C2 is unique is a wasted effort; providing no new useful information. A useful approach is to exclude unique columns from FD analysis.

Single-Value Cases

(4) When C1 has a single value for all rows and C2 does not, then C1!->C2.ex:

C1=test_code C2=* except test_val

C1=test_val C2=* except test_code

(5) When C2 has a single value for all rows and C1 does not, then C1->C2.

C2, always having the same value, can be "derived" from anything/nothing. C1 will appear as a "false positive" functional dependency.

ex:

C1=* except test_val C2=test_code

C1=* except test_code C2=test_val

(6) When both C1 and C2 have a single value for all rows, then C1->C2 and C2->C1.

ex:

C1=test_val C2=test_code

C1=test_code C2=test_val

Analyzing cases with either C1 and/or C2 is having single values is a wasted effort; providing no new useful information. A useful approach is to exclude single-value columns from FD analysis.

My first attempt at FD Analyzer

My first attempt is a 527-line fd.sql script for an Oracle >=11 database, that uses a single anonymous PL/SQL block to accept runtime parameters, generate and execute the analysis SQL, then print the results.

Here is what the result looks like. I am running the FD analyzer (fd.sql) against my example schema.table (user lab_test_rslt) and sampling 100% of the data for analysis:

SQL> @fd user lab_test_rslt 100

SITE_NAME -> SITE_NO

PL/SQL procedure successfully completed.

Elapsed: 00:00:00.050Short and sweet, but what is really going on? Here is a second execution with DEBUG and VERBOSE messages enabled to illustrate some of what is going on under the hood:

SQL> @fd user lab_test_rslt 100

>>> VBS: Owner.table: user.LAB_TEST_RSLT>>> VBS:Sample factor (0.000001-100): 100

>>> VBS:FD_Score lower limit (0-1): >=1

>>> VBS:# of Columns before prune: 7

>>> VBS:pruning: 1,SEQ_NO,cnt=8,num_distinct=8,num_nulls=0 Unique ...

>>> VBS:NOT pruning: 2,SUBJ_NO,cnt=8,num_distinct=4,num_nulls=0

>>> VBS:NOT pruning: 3,SITE_NO,cnt=8,num_distinct=3,num_nulls=0

>>> VBS:NOT pruning: 4,SITE_NAME,cnt=8,num_distinct=4,num_nulls=0

>>> VBS:pruning: 5,TEST_CODE,cnt=8,num_distinct=1,num_nulls=0 Only one distinct value ...

>>> VBS:pruning: 6,TEST_VAL,cnt=8,num_distinct=8,num_nulls=0 Unique ...

>>> VBS:NOT pruning: 7,TEST_RSLT,cnt=8,num_distinct=4,num_nulls=0

>>> VBS:# of Columns after prune: 4

>>> VBS:# of column pairs: 12

>>> DBG:get_results();

>>> DBG:1: SUBJ_NO,SITE_NO

>>> DBG:2: SUBJ_NO,SITE_NAME

>>> DBG:3: SUBJ_NO,TEST_RSLT

>>> DBG:4: SITE_NO,SUBJ_NO

>>> DBG:5: SITE_NO,SITE_NAME

>>> DBG:6: SITE_NO,TEST_RSLT

>>> DBG:7: SITE_NAME,SUBJ_NO

>>> DBG:8: SITE_NAME,SITE_NO

>>> DBG:9: SITE_NAME,TEST_RSLT

>>> DBG:10: TEST_RSLT,SUBJ_NO

>>> DBG:11: TEST_RSLT,SITE_NO

>>> DBG:12: TEST_RSLT,SITE_NAME

>>> DBG:with x as (

select /*+ monitor */

case when count(distinct SITE_NO) over (partition by SUBJ_NO)=1 then 1 else 0 end c1,

case when count(distinct SITE_NAME) over (partition by SUBJ_NO)=1 then 1 else 0 end c2,

case when count(distinct TEST_RSLT) over (partition by SUBJ_NO)=1 then 1 else 0 end c3,

case when count(distinct SUBJ_NO) over (partition by SITE_NO)=1 then 1 else 0 end c4,

case when count(distinct SITE_NAME) over (partition by SITE_NO)=1 then 1 else 0 end c5,

case when count(distinct TEST_RSLT) over (partition by SITE_NO)=1 then 1 else 0 end c6,

case when count(distinct SUBJ_NO) over (partition by SITE_NAME)=1 then 1 else 0 end c7,

case when count(distinct SITE_NO) over (partition by SITE_NAME)=1 then 1 else 0 end c8,

case when count(distinct TEST_RSLT) over (partition by SITE_NAME)=1 then 1 else 0 end c9,

case when count(distinct SUBJ_NO) over (partition by TEST_RSLT)=1 then 1 else 0 end c10,

case when count(distinct SITE_NO) over (partition by TEST_RSLT)=1 then 1 else 0 end c11,

case when count(distinct SITE_NAME) over (partition by TEST_RSLT)=1 then 1 else 0 end c12

from user.LAB_TEST_RSLT

), x2 as (

select count(*) c,

sum(c1)/count(*) p1fd,

sum(c2)/count(*) p2fd,

sum(c3)/count(*) p3fd,

sum(c4)/count(*) p4fd,

sum(c5)/count(*) p5fd,

sum(c6)/count(*) p6fd,

sum(c7)/count(*) p7fd,

sum(c8)/count(*) p8fd,

sum(c9)/count(*) p9fd,

sum(c10)/count(*) p10fd,

sum(c11)/count(*) p11fd,

sum(c12)/count(*) p12fd

from x

)

select c, fd

from x2 unpivot include nulls (

(fd) for n in (

p1fd, p2fd, p3fd, p4fd, p5fd, p6fd, p7fd, p8fd, p9fd, p10fd,

p11fd, p12fd )

)

>>> DBG:sql length: 1646

>>> DBG:l_results.count:12

>>> DBG:SUBJ_NO !-> SITE_NO (s=.125 cnt=8)

>>> DBG:SUBJ_NO !-> SITE_NAME (s=.125 cnt=8)

>>> DBG:SUBJ_NO !-> TEST_RSLT (s=.125 cnt=8)

>>> DBG:SITE_NO !-> SUBJ_NO (s=0 cnt=8)

>>> DBG:SITE_NO !-> SITE_NAME (s=.75 cnt=8)

>>> DBG:SITE_NO !-> TEST_RSLT (s=.5 cnt=8)

>>> DBG:SITE_NAME !-> SUBJ_NO (s=.25 cnt=8)

SITE_NAME -> SITE_NO

>>> VBS: (s=1 cnt=8)

>>> DBG:SITE_NAME !-> TEST_RSLT (s=.75 cnt=8)

>>> DBG:TEST_RSLT !-> SUBJ_NO (s=.375 cnt=8)

>>> DBG:TEST_RSLT !-> SITE_NO (s=.375 cnt=8)

>>> DBG:TEST_RSLT !-> SITE_NAME (s=.375 cnt=8)

PL/SQL procedure successfully completed.

Elapsed: 00:00:00.058Does it scale?

Next, let's try a somewhat more challenging table to analyze for functional dependencies to see if my approach scales.

This table has 50 columns and 50,000 rows of data:

This table has 50 columns and 50,000 rows of data:

exec dbms_random.seed(0); drop table t1; create table t1 as select 0 c1, trunc(2 * dbms_random.normal) c2, trunc(2 * dbms_random.normal) c3, trunc(10 * dbms_random.normal) c4, trunc(10 * dbms_random.normal) c5, dbms_random.string('X',2) c6, dbms_random.string('X',8) c7, rownum c8, trunc(100 * dbms_random.normal) c9, trunc(100 * dbms_random.normal) c10, -- 1 c11, trunc(2 * dbms_random.normal) c12, trunc(2 * dbms_random.normal) c13, trunc(10 * dbms_random.normal) c14, trunc(10 * dbms_random.normal) c15, dbms_random.string('X',2) c16, dbms_random.string('X',8) c17, rownum c18, trunc(100 * dbms_random.normal) c19, trunc(100 * dbms_random.normal) c20, -- 2 c21, trunc(2 * dbms_random.normal) c22, trunc(2 * dbms_random.normal) c23, trunc(10 * dbms_random.normal) c24, trunc(10 * dbms_random.normal) c25, dbms_random.string('X',2) c26, dbms_random.string('X',8) c27, rownum c28, trunc(100 * dbms_random.normal) c29, trunc(100 * dbms_random.normal) c30, -- 3 c31, trunc(2 * dbms_random.normal) c32, trunc(2 * dbms_random.normal) c33, trunc(10 * dbms_random.normal) c34, trunc(10 * dbms_random.normal) c35, dbms_random.string('X',2) c36, dbms_random.string('X',8) c37, rownum c38, trunc(100 * dbms_random.normal) c39, trunc(100 * dbms_random.normal) c40, -- 4 c41, trunc(2 * dbms_random.normal) c42, trunc(2 * dbms_random.normal) c43, trunc(10 * dbms_random.normal) c44, trunc(10 * dbms_random.normal) c45, dbms_random.string('X',2) c46, dbms_random.string('X',8) c47, rownum c48, trunc(100 * dbms_random.normal) c49, trunc(100 * dbms_random.normal) c50 from dual connect by level <= 50000; exec dbms_stats.gather_table_stats(user, 'T1');

The resulting table data looks like 50 columns * 50,000 rows of this:

SQL> select * from t1 where rownum < 11;

C1 C2 C3 C4 C5 C6 C7 C8 C9 C10 C11 C12 C13 C14 C15 C16 C17 C18 C19 C20 C21 C22 C23 C24 C25 C26 C27 C28 C29 C30 C31 C32 C33 C34 C35 C36 C37 C38 C39 C40 C41 C42

C43 C44 C45 C46 C47 C48 C49 C50

0 -1 -1 -1 -6 GY XTG9HRTG 1 -48 -103 1 1 1 -9 2 41 DY9P0VZ0 1 -26 -100 2 0 0 11 -4 DB R4OC4JOX 1 -19 -79 3 2 0 -11 4 RG TXUKDWWX 1 49 -88 4 0

-3 -21 28 US Y8K1VNS2 1 93 -141

0 1 -1 1 7 5N YQ8N1DIO 2 32 -33 1 0 -3 -17 -18 N1 9KJVSHV2 2 -77 -1 2 0 0 13 0 0P 6V6GXE7B 2 71 34 3 -1 0 -10 -9 CZ Q4RY4USA 2 -108 -65 4 0

0 9 2 32 HCTVD58M 2 -22 -58

0 1 -1 15 13 SY CT0BQDS3 3 -14 -23 1 3 0 -8 5 5Z Q41W2V3S 3 -1 -36 2 -3 0 3 7 XT F9G1YA5Z 3 -32 -33 3 2 -1 5 7 UF O337E2NW 3 46 -45 4 1

-1 -6 -8 UZ 2W57U5YC 3 -33 -73

0 -1 -3 0 16 A0 1PFRZ9E8 4 -123 54 1 0 -1 -4 -6 WP IXXKNG7W 4 104 -32 2 -3 0 -8 0 Q8 V3T74TF4 4 -44 29 3 3 0 13 -14 BN PM420DX7 4 -32 -66 4 0

-1 -2 5 92 C0JY11BK 4 18 58

0 -5 -1 15 -4 M0 28LRLTA4 5 -69 99 1 0 0 6 -4 79 IOEN7CJL 5 28 154 2 2 0 -12 -5 B9 WXQK7NSI 5 27 -50 3 0 -2 4 4 7Y 552UCMT5 5 42 -231 4 -4

0 1 22 1U PSX1WZS8 5 53 61

0 -1 0 3 17 WU EKZ5FEB4 6 -38 20 1 0 1 -19 -10 VC 7OT06XYV 6 121 3 2 -2 -3 10 -1 8S 3IRG90W0 6 49 -59 3 0 0 12 12 50 PZRQNVQ9 6 -66 -18 4 0

-3 11 1 W5 81BMFSCZ 6 88 38

0 0 0 2 -10 WJ H52IBY15 7 57 -35 1 0 0 2 13 60 85S3FI0K 7 21 63 2 0 -3 13 3 IK S6IXB3B2 7 255 68 3 0 1 -6 2 RC LUQETJA1 7 170 -4 4 -1

0 -15 1 20 10NGVIAO 7 12 82

0 3 1 -13 3 I4 S1I5M01B 8 12 -44 1 0 0 -4 1 XR LX5206RF 8 -36 28 2 -2 0 7 22 OU J4C13AIB 8 45 -225 3 -4 0 10 -11 JC NIAJHI6Q 8 57 -54 4 2

0 11 -9 W0 5FQ1139O 8 -79 -204

0 3 -1 17 3 DR AG205VLD 9 -60 -106 1 0 2 15 -15 CO S3IQES03 9 -71 7 2 0 -2 2 6 KU 6HDHMCUN 9 -13 237 3 -1 -2 -1 -12 64 CEV6D187 9 -19 -94 4 0

0 -1 -1 OS VQ5EGDDW 9 -99 -189

0 -2 -2 -17 -9 XE A1YT2MLU 10 -29 168 1 5 2 -7 -21 CH NWJJ0RQ7 10 -92 -389 2 -2 0 12 -15 IR 020QEHLL 10 -71 85 3 -2 1 13 -10 6O LSI4DOY3 10 238 -43 4 2

0 -3 5 UY 3SXFEAJL 10 -75 56

10 rows selected.

As is usually the case, I encountered several limitations that needed to be addressed once I started trying to analyze tables that were not trivial textbook examples:

- I began with using a VARCHAR2 datatype for the analysis SQL that I was generating, but had to change up to a CLOB as VARCHAR2s are limited to 32,767 characters and I very quickly exceeded this limit with the bulky, repetitive SQL I was generating.

- I had to limit the number of column pairs to analyze in a single SQL statement to avoid ORA-01792: maximum number of columns in a table or view is 1000. A table with 33 columns results in 1056 permutations of column pairs, which is TOO MANY. I mitigated this with batching into an analysis of 700 column permutations per SQL statement. Unfortunately, more SQL statements require more table scans, slowing down the analysis.

- I experimented with choosing a random sample of rows to driven by a sample percentage (0.000001 - 100 where 100=ALL) as per Oracle SAMPLE() modifier. This seems to have limited use as I cannot see how the result from a sample is statistically useful in this case.

- I started with running additional SQL to determine column cardinality (unique, single-values, etc.), but changed to leverage existing Oracle table stats instead. This works great as long as I remember to run dbms_status.gather_table_stats();

- I also experimented with partial functional dependencies by introducing a g_fd_score_lower_limit variable that could be set to 1.0 to find strict FDs only, or set to lower values to find "almost FDs" as well. This does seem like it might be useful.

My environment was Oracle 12.2.0.1, on Redhat, a Dell PowerEdge R730XD with 16 cores. Here is what the execution profile looks like when I fire up this lights-dimming monster:

I'll spare you the 3,622 debug & verbose stdout here, but if you want to see the whole tamale:

fd_XXX_t1_100.log

Fd.sql pruned 11 of the 50 columns, leaving 39 columns to analyze, resulting in 1,482 column pairs, any of which could potentially be a functional dependency. Without pruning, there would be 2,450 column pairs to analyze (n!/(n-2)! where n = the number of columns), so pruning reduced the work to do by about 40%.

As noted above, the resulting SQL was so large, that, to avoid ORA-01792: maximum number of columns in a table or view is 1000, fd.sql split the analysis into three SQL statements; the first two for 700 column pairs each and the 3rd for the final eighty-two pairs. If you squint at the above SQL Execution profile, you can see two large humps for the first two SQL statements (apparently the 3rd statement was too trivial to appear).

The time the database spent doing work was primarily CPU (63%), secondarily I/O (37%). There was 331GB of I/O, almost evenly split between reads (55%) and writes (45%).

Under the Hood

I also captured an Oracle trace for a different execution against a smaller 10,000 row version of the 50 column table in a sandbox Oracle instance on my laptop. This allowed for a more detailed inspection of what Oracle was doing under the hood.

This execution took about 16 minutes, 5 minutes of which were spent just parsing several absolutely ridiculous multi-thousand-line SQL selects. Looking at the trace, you can tell that most of the CPU is the result of several thousand sorts.

Here is the trace, the tkprof with no sort, and tkprof sorted by execution time:

In summary, my optimizations reduced redundant table scans, but that ended up being the least important part of the overall workload. The real work was the many DISTINCT COUNT operations, each requiring a SORT. There are n!/(n-2)! SORTS required, where n = number of columns.

In summary, my optimizations reduced redundant table scans, but that ended up being the least important part of the overall workload. The real work was the many DISTINCT COUNT operations, each requiring a SORT. There are n!/(n-2)! SORTS required, where n = number of columns.

This algorithm is a textbook example of an O(n²) complexity: as the number of inputs increases linearly, the time to compute expands exponentially.

I need a better algorithm.

Next Steps (to be addressed in a future post)

We have now taken the original prototype from "Grokking the Data Model" and used it as the basis for a first attempt at an FD Analyzer utility. Everything looked hunky-dory (technical term for groovy) until we tried to use it against a table that was not a trivial textbook example, then the harsh reality of O(n²) algorithms crashed the party.

The next step is to find a better algorithm, or at least understand why a better algorithm cannot be found. I suspect that my favorite utility will be useful (https://lmgtfy.com/)

More to come in a future post.

More to come in a future post.